I Was the Style Police in Code Reviews for Years. I Was Wrong.

After tracking 200 PRs, I found most review comments were just noise. Here's the 8-question checklist that cut our turnaround time nearly in half.

Dr. Elena Vasquez

Security researcher with a PhD in Computer Science from Stanford, specializing in application security and cryptography. Elena makes complex security topics accessible without dumbing them down.

Last year, I sat in a post-mortem meeting that completely changed how I think about code reviews. We'd just shipped a critical bug to production. The kicker? That exact code had been reviewed by three senior engineers over six days. Six days of back-and-forth comments, nitpicking variable names, debating whether a helper function should live in a utils file or a services directory.

Nobody caught the actual bug: a simple null check.

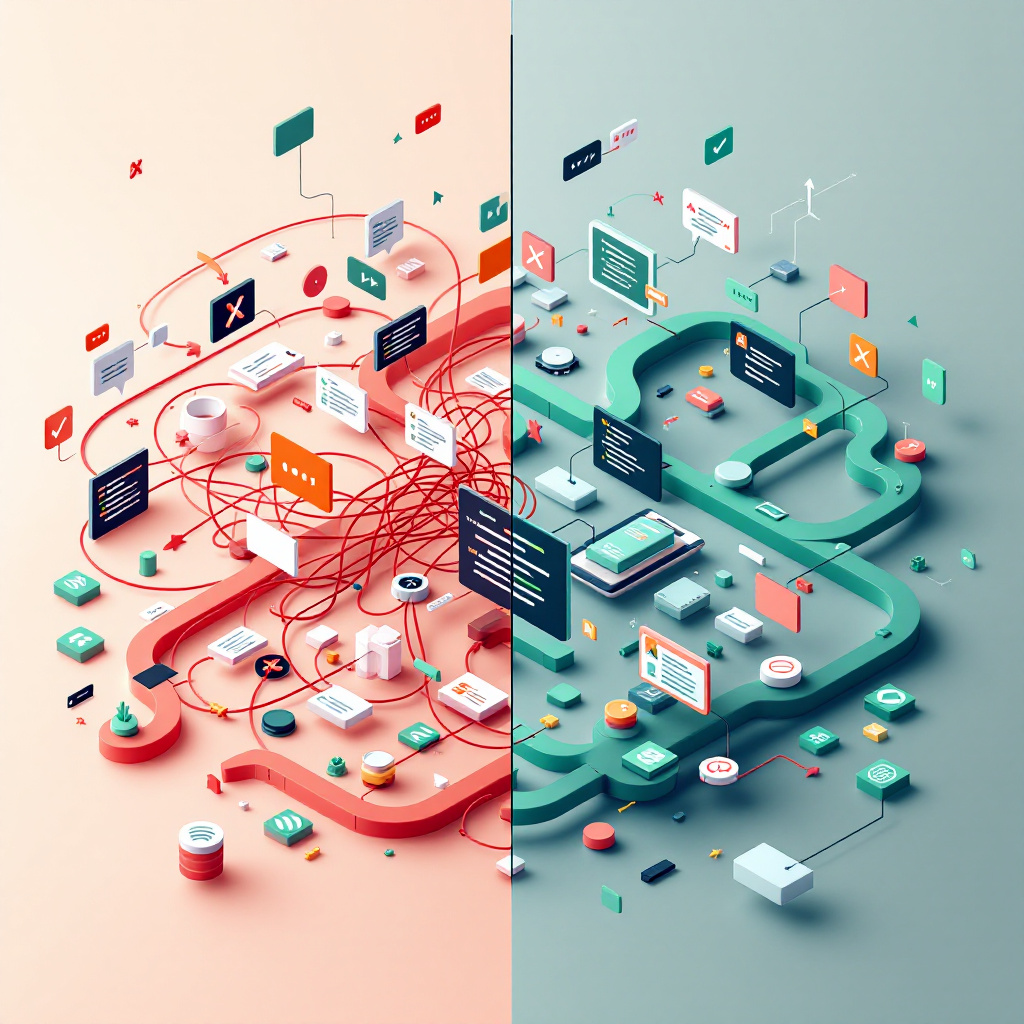

That moment forced me to confront something uncomfortable. Our "thorough" review process wasn't making our code better. It was making our team slower, more frustrated, and honestly, less careful. When everything gets flagged as important, nothing is.

Three months of research, failed experiments, and rebuilding from scratch followed. What emerged was a senior developer code review checklist that transformed how my team works. PR turnaround dropped significantly, from over four days on average to around two and a half. But more importantly, our production incident rate went down, not up.

Let me walk you through what we learned about what actually matters in code review and what's just noise.

The Code Review Anti-Patterns We Were All Guilty Of (And the Data That Proved It)

Before I share what works, let's talk about what doesn't. I started tracking our review comments across roughly 200 PRs, categorizing each into buckets. The results were honestly embarrassing.

Based on my team's internal analysis, the majority of our comments fell into these categories:

- Style preferences that didn't match any documented standard ("I prefer early returns," sound familiar?)

- Asking questions that could be answered by reading the linked ticket

- Suggesting refactors that would touch code outside the PR's scope

- Relitigating architecture decisions already made in design docs

Fewer than a quarter of comments addressed actual bugs, security issues, or significant maintainability concerns. The remainder were neutral observations or questions for understanding.
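If you want to run the same kind of tally on your own team, a minimal sketch might look like the following. The category names and the sample data are made up for illustration; the buckets used in the analysis above are not spelled out beyond the list, so treat `SUBSTANTIVE` as an assumption you would replace with your own labels.

```python
from collections import Counter

# Hypothetical category labels; swap in whatever buckets your team agrees on.
SUBSTANTIVE = {"bug", "security", "maintainability"}

def summarize(categories):
    """Return per-category counts and the share of substantive comments."""
    counts = Counter(categories)
    total = sum(counts.values())
    substantive = sum(n for cat, n in counts.items() if cat in SUBSTANTIVE)
    share = substantive / total if total else 0.0
    return counts, share

# Made-up sample data, one label per review comment.
sample = ["style", "style", "scope", "bug", "question", "style", "security", "architecture"]
counts, share = summarize(sample)
print(counts.most_common(3))
print(f"substantive share: {share:.0%}")
```

Labeling comments by hand is tedious, but even a few dozen PRs is usually enough to see where the review energy goes.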

We were spending the bulk of our review energy on things that didn't matter.

When your solution for code reviews taking too long becomes the problem itself, you've inverted the entire purpose of the process. Reviews exist to catch problems before production. Not to prove how much you know, not to enforce personal preferences, not to redesign the system one PR at a time.

The real anti-patterns we identified:

- The Archaeology Dig: Reviewing code history instead of the actual changes

- The Perfect Enemy: Blocking PRs because they don't fix every problem in the file

- The Fishing Expedition: Asking questions you could answer yourself in 30 seconds

- The Style Police: Enforcing preferences that aren't in your linter config

- The Scope Creep: Requesting changes unrelated to the PR's purpose

Once we named these patterns, we started catching ourselves doing them. That awareness alone cut unnecessary comments substantially.

The 8-Question Senior Developer Checklist: What Actually Matters in 2024

After analyzing what actually prevented production issues versus what just made reviewers feel productive, I developed this checklist. I literally keep it open in a browser tab during every review.

Question 1: Does this code do what the ticket says it should do?

Sounds obvious, right? It's not. Every review starts with me re-reading the ticket. I'm checking for missing requirements, misunderstood edge cases, or scope drift. This catches more real bugs than any amount of code scrutiny.

Question 2: What happens when this fails?

Every external call, every database operation, every user input: what's the failure mode? Is it handled? Is it logged? Will someone get paged at 3 a.m. with no context?
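As a rough illustration of what a handled, logged failure mode can look like, here is a minimal sketch. `charge_customer`, `PaymentError`, and the `gateway_call` parameter are hypothetical names standing in for whatever external client your code wraps.

```python
import logging

logger = logging.getLogger("payments")

class PaymentError(Exception):
    """Raised when a charge cannot be completed."""

def charge_customer(gateway_call, customer_id, amount_cents):
    """Call an external gateway and fail loudly, with context, if it breaks.

    gateway_call is a hypothetical callable standing in for any external client.
    """
    try:
        return gateway_call(customer_id, amount_cents)
    except (TimeoutError, ConnectionError) as exc:
        # Log enough context for whoever gets paged to know what was attempted.
        logger.error(
            "charge failed: customer_id=%s amount_cents=%s error=%r",
            customer_id, amount_cents, exc,
        )
        # Chain the original exception so the root cause stays in the traceback.
        raise PaymentError(f"charge failed for customer {customer_id}") from exc
```

The point for a reviewer is not this exact shape. It is that the failure is caught deliberately, logged with identifiers, and surfaced as something the caller can act on.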

Question 3: Can this break existing functionality?

My focus is on what this code touches, not just what it adds. Changed a shared utility? Better check who else uses it. Modified a database schema? What happens to existing data?

Question 4: Are there security implications?

With my background in cryptographic protocols and security research, this is where I probably spend disproportionate time. But honestly, most security issues I catch aren't sophisticated. They're SQL injection through unsanitized inputs, API keys in code, or missing authentication checks on endpoints. The basics.
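For the SQL injection case, the fix a reviewer is usually looking for is a parameterized query. A minimal sketch using Python's built-in `sqlite3` module, with a made-up `users` table and a hypothetical `find_user` helper:

```python
import sqlite3

def find_user(conn, username):
    """Look up a user with a parameterized query instead of string formatting."""
    # The ? placeholder makes the driver bind the value, so input is never parsed as SQL.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchall()

# In-memory database with made-up rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

print(find_user(conn, "alice"))
# A classic injection string is treated as a literal name and matches nothing.
print(find_user(conn, "x' OR '1'='1"))
```

If the PR builds the query with an f-string or `+` concatenation around user input, that is a blocking comment.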

Question 5: Will this be debuggable at 3 a.m.?

Not "is this code elegant?" but "when this breaks in production, can someone figure out what happened?" Are there logs? Are error messages useful? Is the control flow followable?

Question 6: Is there test coverage for the risky parts?

Not "is there 100% coverage?" but rather coverage for the risky parts: the business logic, the edge cases, the failure paths. Untested happy paths are usually fine. Untested error handling? That'll haunt you.
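To make "untested error handling" concrete, here is a small sketch of tests aimed only at failure paths. `parse_quantity` is a hypothetical business rule invented for this example.

```python
import unittest

def parse_quantity(raw):
    """Hypothetical business rule: quantity must be a positive integer."""
    try:
        value = int(raw)
    except (TypeError, ValueError):
        raise ValueError(f"quantity must be an integer, got {raw!r}") from None
    if value <= 0:
        raise ValueError(f"quantity must be positive, got {value}")
    return value

class ParseQuantityFailurePaths(unittest.TestCase):
    def test_rejects_non_numeric_input(self):
        with self.assertRaises(ValueError):
            parse_quantity("abc")

    def test_rejects_none(self):
        with self.assertRaises(ValueError):
            parse_quantity(None)

    def test_rejects_zero_and_negative(self):
        for raw in ("0", "-3"):
            with self.subTest(raw=raw):
                with self.assertRaises(ValueError):
                    parse_quantity(raw)
```

Saved in a test module, this runs with `python -m unittest`. Notice there is no happy-path test here at all. That is the trade described above, not an oversight.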

Question 7: Does this introduce new dependencies or patterns the team should discuss?

A new library? A new way of doing something? Maybe that's fine, but it shouldn't slip in without awareness. These are synchronous conversations, not PR comments.

Question 8: Would I be comfortable deploying this on a Friday?

My gut check. If something about this code makes me nervous, I need to figure out why. And if I can't articulate a specific concern? I approve it.

Eight questions. Everything else is either automation (linting, formatting, type checking) or it's noise.

The Art of Constructive Feedback: Turning Code Reviews into Mentoring Moments

When you're mentoring junior developers through code reviews, how you deliver feedback matters as much as what feedback you give. I've watched senior engineers leave technically correct comments that made junior devs feel stupid, defensive, or just confused.

The framework I use for giving constructive feedback:

Separate the must-fix from the nice-to-have. Literally prefix comments with labels: [blocking], [suggestion], [question], [nit]. That eliminates the guessing game of "do I need to address this to get approved?"
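One side benefit of consistent labels is that they are machine-readable. As a sketch, assuming comments are available as plain strings, a few lines can pull out what actually blocks approval. `split_label` and `must_fix` are hypothetical helpers, not part of any review tool.

```python
import re

LABELS = ("blocking", "suggestion", "question", "nit")
LABEL_RE = re.compile(r"^\[(\w+)\]\s*")

def split_label(comment):
    """Return (label, body); label is None when the prefix is missing or unknown."""
    match = LABEL_RE.match(comment)
    if match and match.group(1).lower() in LABELS:
        return match.group(1).lower(), comment[match.end():]
    return None, comment

def must_fix(comments):
    """Keep only the comments the author has to address before approval."""
    return [body for label, body in map(split_label, comments) if label == "blocking"]

print(must_fix(["[blocking] missing auth check on /export", "[nit] typo in docstring"]))
```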

Explain the why. Always. "This could cause a race condition" is better than "add a lock here." Better still: "This could cause a race condition if two users submit simultaneously. Here's a scenario..." Teaching scales. Orders don't.

Ask questions instead of making statements. "What happens if the list is empty?" is less confrontational than "This will throw if the list is empty." It also prompts the author to think through the problem, which is more valuable than just fixing it.

When you need to give negative feedback, be specific and solution-oriented. Bad: "This approach is wrong." Good: "This approach runs a separate query for every user on top of the initial one, the classic N+1 problem. We could batch this query like we do in UserService.loadProfiles(). Want me to show you that pattern?"
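The batching idea in that example comment can be sketched as follows. This is not the `UserService.loadProfiles()` implementation, which isn't shown here. It is a generic illustration using `sqlite3`, with a made-up `profiles` table and hypothetical function names.

```python
import sqlite3

def load_profiles_one_by_one(conn, user_ids):
    """One query per user: the pattern the review comment flags."""
    rows = []
    for user_id in user_ids:
        rows += conn.execute(
            "SELECT user_id, bio FROM profiles WHERE user_id = ?", (user_id,)
        ).fetchall()
    return rows

def load_profiles_batched(conn, user_ids):
    """One query for all users, using an IN clause with bound parameters."""
    if not user_ids:
        return []
    placeholders = ", ".join("?" for _ in user_ids)
    query = f"SELECT user_id, bio FROM profiles WHERE user_id IN ({placeholders})"
    return conn.execute(query, list(user_ids)).fetchall()

# In-memory database with made-up rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE profiles (user_id INTEGER PRIMARY KEY, bio TEXT)")
conn.executemany("INSERT INTO profiles VALUES (?, ?)", [(1, "a"), (2, "b"), (3, "c")])

print(sorted(load_profiles_batched(conn, [1, 3])))
```

Only the placeholders are interpolated into the SQL string. The IDs themselves are still bound as parameters. Databases cap the number of bound parameters per statement, so very large ID lists would need to be chunked.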

And sometimes, the best mentoring is a Slack call, not a comment thread. If I'm leaving more than three related comments, I hop on a call instead. Fifteen minutes of conversation beats two days of async back-and-forth.

Time Management Tactics: Async Reviews and the 25-Minute Review Block System

Confession: I used to treat code reviews as interruptions, something to squeeze between "real work." That mindset guaranteed shallow reviews and frustrated teammates waiting for feedback.

What actually works for me? Dedicated review blocks.

The 25-Minute Review Block System:

Two 25-minute blocks daily. That's it. First thing in the morning and right after lunch. During those blocks, reviews are my primary work, not an interruption.

Why 25 minutes? My attention starts degrading after about 20-30 minutes on a single task, and 25 minutes happens to match the work interval used in the Pomodoro Technique. A 25-minute block lets me deeply review one or two medium PRs or knock out three or four small ones.

Asynchronous code review practices that actually help:

- Set expectations on response time. Our team aims for first-pass review within a few hours. Not instant, but predictable.

- Front-load context. PR descriptions should let me understand the change without reading the code first. If they don't, I ask for a better description before reviewing.

- Use draft PRs for early feedback. Junior devs especially benefit from a quick "you're on the right track" before they polish code that's headed the wrong direction.

- Timebox deep review. Most PRs shouldn't take more than 15-20 minutes. If I've been reviewing for 30 minutes, the PR is probably too big. And that's feedback in itself.

The dirty secret: Most PRs don't need senior-level scrutiny. We implemented a tiered system where simple PRs (config changes, copy updates, small bug fixes) only need one reviewer of any level. Only PRs touching critical paths or introducing new patterns need senior review.

That freed up a significant portion of senior review time for the PRs that actually needed experienced eyes.
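A tiering rule like this is simple enough to encode. Here is a minimal sketch. The path prefixes and the `PullRequest` shape are assumptions for illustration, since every team defines its own critical paths.

```python
from dataclasses import dataclass

# Hypothetical path prefixes; each team would define its own critical paths.
CRITICAL_PREFIXES = ("billing/", "auth/", "migrations/")

@dataclass
class PullRequest:
    changed_files: list
    introduces_new_pattern: bool = False

def needs_senior_review(pr):
    """Senior review only for critical paths or new patterns; otherwise any one reviewer."""
    touches_critical = any(path.startswith(CRITICAL_PREFIXES) for path in pr.changed_files)
    return touches_critical or pr.introduces_new_pattern

print(needs_senior_review(PullRequest(["docs/README.md"])))
print(needs_senior_review(PullRequest(["auth/session.py"])))
```

Whether a PR "introduces a new pattern" still takes human judgment. In this sketch it is just a flag the author or first reviewer sets.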

The Reversibility Matrix: When to Block PRs vs. When to Approve and Iterate

Finding problems isn't the hardest skill in effective pull request review. Deciding which problems warrant blocking a PR? That's the hard part.

What I call the Reversibility Matrix is a simple mental model for making that call.

Block the PR when:

- Fixing the issue post-merge will be expensive or risky (database migrations, public API changes, security vulnerabilities)

- The code is provably incorrect for stated requirements

- Existing functionality will likely break

- Data loss or corruption could occur

Approve with comments when:

- You're looking at a code smell, not a bug

- Post-merge fixes would be straightforward

- Performance concerns need measurement, not speculation

- Suggestions reflect preference, not requirement

Create a follow-up ticket when:

- The issue exists but is out of scope for this PR

- Broader discussion or design is needed

- Addressing it now would significantly delay the PR
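The three lists above can be written down as a small decision function. This is a sketch of the mental model, not a tool we run. The field names are hypothetical, and the precedence (blocking conditions first, then follow-up tickets, then approve with comments) is my assumption about how ties would be resolved.

```python
from dataclasses import dataclass
from enum import Enum

class Decision(Enum):
    BLOCK = "block"
    APPROVE_WITH_COMMENTS = "approve with comments"
    FOLLOW_UP_TICKET = "follow-up ticket"

@dataclass
class Issue:
    hard_to_reverse: bool = False            # migrations, public API changes, security holes
    incorrect_for_requirements: bool = False
    breaks_existing_behavior: bool = False
    risks_data_loss: bool = False
    out_of_scope: bool = False
    needs_design_discussion: bool = False
    would_significantly_delay: bool = False

def decide(issue):
    """Map a review finding to an action; blocking conditions take precedence (assumed)."""
    if (issue.hard_to_reverse or issue.incorrect_for_requirements
            or issue.breaks_existing_behavior or issue.risks_data_loss):
        return Decision.BLOCK
    if issue.out_of_scope or issue.needs_design_discussion or issue.would_significantly_delay:
        return Decision.FOLLOW_UP_TICKET
    # Code smells, preferences, and unmeasured performance worries land here.
    return Decision.APPROVE_WITH_COMMENTS

print(decide(Issue(risks_data_loss=True)).value)
print(decide(Issue(out_of_scope=True)).value)
print(decide(Issue()).value)
```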

Sometimes shipping imperfect code quickly beats shipping perfect code slowly. That's just reality. Every day in review limbo is a day without real user feedback or production testing.

Not an excuse to ship garbage. Just being honest about what actually needs to be fixed now versus what can be improved later.

Follow-up ticket items get tracked on my end. If they consistently never get done, that's a signal. If they get addressed in subsequent iterations, the system is working.

Improving your team's code review culture isn't about mandating a checklist. It's about shifting how your team thinks about what reviews are for.

Your 30-day implementation plan:

Week 1: Measure your baseline. Track how long PRs sit in review. Categorize your review comments. You can't improve what you don't measure. Prepare to be surprised by what you find.

Week 2: Introduce the checklist. Share the 8-question framework with your team. Make it visible. Use it in your own reviews and reference which questions you're addressing in your comments.

Week 3: Implement review blocks. Experiment with dedicated review time. Get feedback from your team on what timing works. Agree on expected response times.

Week 4: Calibrate the Reversibility Matrix. Have a team discussion about what warrants blocking PRs versus approving with comments. Document decisions. Review some past contentious PRs through this lens.

Ongoing: Periodically review your review metrics. Are things improving? What patterns keep recurring?
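For the Week 1 baseline and the ongoing check-ins, the turnaround number itself is easy to compute once you have open and merge timestamps. A minimal sketch with made-up timestamps follows. How you export them from your Git host is up to you and not shown here.

```python
from datetime import datetime
from statistics import median

def turnaround_days(opened_at, merged_at):
    """Elapsed time between opening and merging a PR, in days."""
    return (merged_at - opened_at).total_seconds() / 86400

# Made-up (opened, merged) pairs, for illustration only.
prs = [
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 3, 9)),
    (datetime(2024, 1, 2, 9), datetime(2024, 1, 6, 21)),
    (datetime(2024, 1, 5, 9), datetime(2024, 1, 8, 9)),
]

days = [turnaround_days(opened, merged) for opened, merged in prs]
print(f"median: {median(days):.1f} days, worst: {max(days):.1f} days")
```

I'd suggest looking at the median and the worst case together. An average hides the one PR that sat for two weeks.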

Shorter reviews aren't the goal. Better ones are, and sometimes those actually take longer.

Our significant time reduction wasn't from reviewing less carefully. It was from reviewing more intentionally. We still catch bugs. We still mentor juniors. We still maintain code quality.

We just stopped pretending that thoroughness means reviewing everything, and started focusing on what actually matters.

What's your team's biggest code review pain point? I'd genuinely love to hear what's working or not working for you. Some of the best code review techniques I've learned came from teams trying things I never would have thought of.

Looking to level up your code review practices? Start with one change this week. Measure the results. Iterate. That's the whole secret.
