Copilot Made My Bug Fixes 12% Slower (And Other Surprises from 365 Days of Tracking)

365 days of tracking every Copilot suggestion revealed a 15% overall gain—but bug fixes took 12% longer. Here's the task-by-task breakdown.

Dorothy Williams

Engineering leader with 30+ years in tech, from mainframes to microservices. Dorothy brings historical perspective to modern development and mentors the next generation of tech leaders.

I've been writing code since before most of today's junior developers were born. My career started on COBOL at IBM in 1991, then moved through C++, Java, Python, and whatever else the industry threw at us. After thirty-plus years, I've watched enough "revolutionary" tools come and go that skepticism comes naturally when anything promises to transform how we code.

So when GitHub Copilot started gaining traction, breathless enthusiasm wasn't my reaction. Instead, I did what any engineer with too much time and a love of data should do: I tracked everything. Every single day for 365 days.

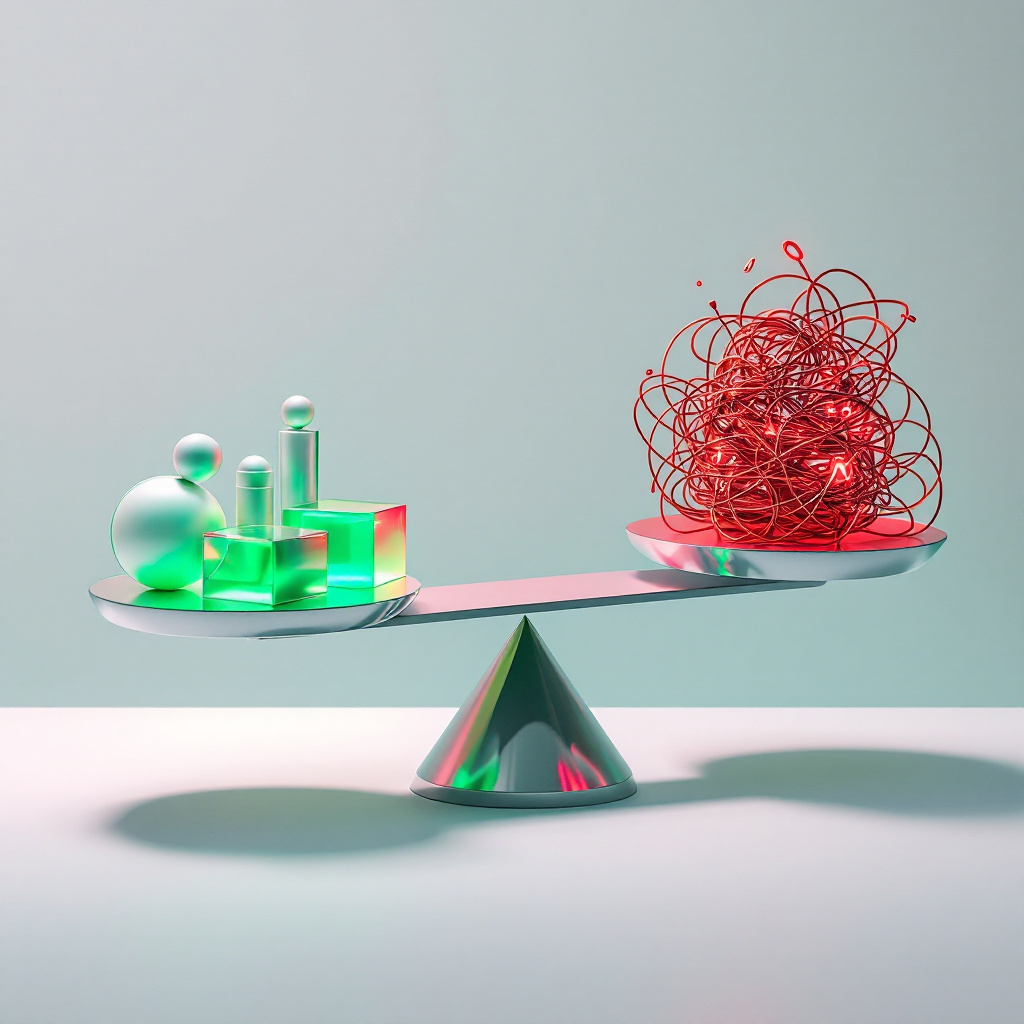

What follows is my honest long-term GitHub Copilot review, and you won't find the usual "it's amazing!" or "it's useless!" takes here. The truth, as it usually does, sits somewhere in the messy middle. And the patterns that emerged surprised even me.

What did I discover about whether GitHub Copilot actually saves time, where it genuinely helps, and where it might be making you a worse developer? Let me walk you through it.

My Methodology: How I Measured Time Savings, Error Rates, and Actual Productivity Impact

Here's how I tracked this, because methodology matters.

My system combined RescueTime for automatic time tracking with a custom spreadsheet where I logged daily observations. For every coding session, I noted:

- Task type (new feature, bug fix, refactoring, boilerplate, tests)

- Language and framework

- Copilot suggestions accepted vs. rejected

- Time spent fixing Copilot-generated code

- Bugs later traced back to Copilot suggestions

Control periods were essential too. Every fourth week, I disabled Copilot entirely and worked the old-fashioned way. This gave me baseline comparisons.
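Here's a minimal sketch of how a baseline comparison like this could be computed from a session log. The row layout, the choice of comparing mean minutes per task, and the sample numbers are all illustrative assumptions, not the spreadsheet or data behind this article.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log rows: (task_type, copilot_enabled, minutes_spent).
# These numbers are made up purely to exercise the code.
SESSIONS = [
    ("boilerplate", True, 21), ("boilerplate", True, 19),
    ("boilerplate", False, 30), ("boilerplate", False, 28),
    ("bug_fix", True, 58), ("bug_fix", True, 54),
    ("bug_fix", False, 50), ("bug_fix", False, 50),
]


def percent_change_by_task(sessions):
    """Return {task_type: % change in mean minutes, Copilot vs. control}.

    Negative means faster with Copilot; positive means slower.
    """
    buckets = defaultdict(lambda: {True: [], False: []})
    for task_type, copilot_enabled, minutes in sessions:
        buckets[task_type][copilot_enabled].append(minutes)

    changes = {}
    for task_type, groups in buckets.items():
        if not groups[True] or not groups[False]:
            continue  # need both a Copilot sample and a control sample
        baseline = mean(groups[False])
        with_copilot = mean(groups[True])
        changes[task_type] = (with_copilot - baseline) / baseline * 100
    return changes


if __name__ == "__main__":
    for task, change in percent_change_by_task(SESSIONS).items():
        print(f"{task}: {change:+.1f}%")
```

Grouping by task type before comparing matters: a single overall average would hide exactly the kind of variation the breakdown is meant to expose.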

Now, I'll be honest: this isn't a peer-reviewed study. It's one developer's experience. But it's 365 days of actual data from real projects, not a weekend test on toy applications. My work during this period included Python backend services, some TypeScript frontend work, and consulting projects across various codebases.

The Numbers Don't Lie: Real Productivity Gains Broken Down by Task Type

Here's my summary after a full year:

Overall time savings: approximately 15%

That's it. Not the 55% some claim. Not the "doubled my productivity" stories you see on Twitter. About 15%.

But that average hides a lot of variation. Breaking it down by task type tells the real story:

| Task Type | Time Change | Notes |
|---|---|---|
| Boilerplate code | ~30% faster | Copilot's strongest area |
| Unit tests | ~25–30% faster | Especially for predictable patterns |
| New feature development | ~5–10% faster | Mixed results |
| Bug fixing | 12% slower | Yes, slower |
| Complex refactoring | 18% slower | Significant distraction |

That's the messy middle I mentioned. When people ask whether GitHub Copilot is worth it for developers, my answer is: it depends entirely on what kind of coding you spend your time doing.

If you write lots of boilerplate and tests, the real-world GitHub Copilot productivity gains are substantial. If you're mostly debugging legacy systems or doing architectural refactoring, you might actually be better off without it.

Where Copilot Excels: The 4 Scenarios Where It Genuinely Saves Serious Time

After a year of obsessive tracking, four scenarios emerged where Copilot consistently delivers value:

1. Standard CRUD Operations and Boilerplate

Writing another REST endpoint? Setting up database models? The tool handles these like a seasoned assistant who's written the same code a thousand times, because it literally has. I'd type a function signature and watch it generate exactly what I needed about 80% of the time.

2. Unit Test Generation

This one surprised me. I'd write a function, start typing the test, and Copilot would suggest comprehensive test cases I might have missed. Not perfect, but it's a solid starting point.

3. Unfamiliar APIs and Libraries

Working with a new library? Copilot often knows the common patterns better than I do initially. It's like having a colleague who's already used every npm package in existence. Just don't trust it blindly, because sometimes it suggests deprecated methods or outdated syntax.

4. Regex and String Manipulation

Three decades of coding under my belt, and I still have to look up regex syntax. Copilot handles these beautifully. Type a comment describing what you want to match, and there's your pattern. For some folks, this alone might justify the subscription.
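To illustrate that comment-first workflow, here's the kind of pattern such a prompt might produce. This is a hand-written sketch, not a recorded Copilot suggestion; the `find_iso_dates` name and the date format are hypothetical choices for the example.

```python
import re

# Match ISO-style dates such as 2024-03-15 (YYYY-MM-DD).
# Note: this checks the shape and the month/day ranges only; it does not
# reject impossible dates like 2024-02-31.
ISO_DATE = re.compile(r"\b\d{4}-(?:0[1-9]|1[0-2])-(?:0[1-9]|[12]\d|3[01])\b")


def find_iso_dates(text: str) -> list[str]:
    """Return every YYYY-MM-DD-shaped date found in text."""
    return ISO_DATE.findall(text)
```

Even with a pattern this small, it's worth running a few edge cases (invalid months, dates next to punctuation) before trusting a generated regex.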

Where Copilot Fails: Hidden Costs, Bad Habits, and the Debugging Trap

Now for the downsides of using Copilot extensively. The cons don't show up immediately. They creep in over months.

The Debugging Trap

Here's what happened repeatedly: Copilot would suggest code that looked right, passed a quick visual review, and I'd accept it. Later, a bug would surface. Tracking it down took longer than writing the code manually would have, because I didn't have the same mental model of what the code was doing.

After month three, I started noticing something troubling. I was debugging code I didn't fully understand. That's a bad sign.

The Atrophy Problem

Something subtle happened around month six. I caught myself struggling with tasks I used to do automatically. My brain had started outsourcing basic pattern completion. During my control weeks with Copilot disabled, I felt slower than I'd been before starting the experiment.

Is this a big deal? I'm not sure yet. But it concerns me, especially for newer developers who might never build certain mental muscles in the first place.

Security Suggestions That Aren't

My tracking identified three instances where Copilot suggested code with potential security vulnerabilities: SQL injection patterns, improper input validation, and hardcoded secrets in test code that could have slipped through. Always review generated code with security in mind.
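For readers who haven't seen the SQL injection pattern up close, here's a minimal sketch of the vulnerable shape next to the parameterized version, using Python's standard `sqlite3` module. The table, the function names, and the sample users are hypothetical, and this is not one of the actual suggestions I logged.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # Safer: the driver binds the value, so it is never parsed as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)",
                     [("alice",), ("bob",)])
    return conn
```

Passing an input like `x' OR '1'='1` to the unsafe version returns every row, while the safe version treats it as an ordinary (non-matching) username. Both functions look equally plausible at a glance, which is why a quick visual review isn't enough.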

Context Window Limitations

Copilot doesn't understand your whole codebase. It sees the current file and some surrounding context. The result is suggestions that work syntactically but violate your project's patterns, naming conventions, or architectural decisions.

GitHub Copilot vs. ChatGPT for Coding: When to Use Each

People frequently ask about GitHub Copilot vs. ChatGPT for coding, and my usage patterns for each have become quite distinct.

Use Copilot when:

- You're in flow and want inline suggestions

- Writing predictable, pattern-based code

- You need quick completions without context switching

- Working on well-defined, small tasks

Use ChatGPT (or Claude) when:

- You need to explain a complex problem and get architectural advice

- Debugging something weird and want to think through it

- Learning a new concept or approach

- You want to paste larger code blocks for review

A year with Copilot taught me that these tools serve different purposes. Copilot is your autocomplete with significantly expanded capabilities. ChatGPT is your rubber duck that talks back.

On a typical day, I use Copilot maybe 20 times for quick completions and ChatGPT 3–4 times for deeper problem-solving.

Is the Subscription Worth $10/Month? My ROI Calculation Framework

Let's do the math on whether the GitHub Copilot subscription is worth the price.

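At $10/month or $100/year (the individual plan), you're paying roughly 27 to 33 cents per day. If your time is worth $50/hour, that is less than half a minute of work, so the subscription breaks even if Copilot saves you about 20 to 25 seconds a day. Save 3 minutes daily and you're getting back about $2.50 of time for that cost. Anything beyond break-even is profit.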

My roughly 15% time savings on about 30 hours of weekly coding comes to about 4.5 hours per week. That's significant.
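The arithmetic is easy to check in a few lines. Here's a minimal sketch using the figures from this section ($100/year, $50/hour, 30 hours a week, 15% savings); the function names are just for illustration.

```python
# Figures from this section; the function names are illustrative.
ANNUAL_PRICE_USD = 100.0
HOURLY_RATE_USD = 50.0
WEEKLY_CODING_HOURS = 30.0
TIME_SAVINGS = 0.15


def daily_cost(annual_price: float = ANNUAL_PRICE_USD) -> float:
    """Subscription cost spread over 365 calendar days."""
    return annual_price / 365


def value_of_minutes(minutes: float,
                     hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Dollar value of a given number of minutes at an hourly rate."""
    return minutes / 60 * hourly_rate


def weekly_hours_saved(hours: float = WEEKLY_CODING_HOURS,
                       savings: float = TIME_SAVINGS) -> float:
    """Hours saved per week at a given fractional time saving."""
    return hours * savings


if __name__ == "__main__":
    print(f"Cost per day: ${daily_cost():.2f}")
    print(f"Value of 3 minutes: ${value_of_minutes(3):.2f}")
    print(f"Hours saved per week: {weekly_hours_saved():.1f}")
```

Swap in your own rate, hours, and measured savings to see how the numbers shift for your situation.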

But here's my framework for different developer types:

Worth it if you:

- Write lots of boilerplate or tests

- Work across multiple languages and frameworks

- Are comfortable reviewing and validating AI suggestions

- Bill hourly or have measurable productivity metrics

Probably not worth it if you:

- Primarily do debugging and maintenance

- Work on highly specialized or unusual codebases

- Are a newer developer still building fundamentals

- Find the suggestions more distracting than helpful

As for whether GitHub Copilot is worth it for developers in 2025, my answer is: run the numbers for your specific situation. Track your own usage for a month. The free trial exists for a reason.

After a full year of daily use, here's where I land:

Copilot is a legitimate productivity tool with real benefits and real costs. It's not magic, and it won't replace developers anytime soon. It also won't make you twice as productive. The truth is somewhere around 10–20% better for most use cases, with significant variation based on what you're doing.

My subscription stays active. Time saved on boilerplate and tests justifies the cost for my work. But I've become more cautious about when I accept suggestions, and I disable it entirely for complex debugging sessions.

Your 30-day test:

- Sign up for the free trial

- Create a simple tracking spreadsheet

- Log your task types, acceptance rate, and any bugs you trace back to suggestions

- Run one week with Copilot disabled for comparison

- Calculate your actual time savings (or losses)
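If a spreadsheet feels like too much friction, the same log can live in a CSV file. Here's a minimal sketch, assuming one row per coding session; the `SessionLog` fields mirror the items listed in my methodology, but the names and file layout are hypothetical.

```python
import csv
from dataclasses import dataclass, asdict, fields
from pathlib import Path


@dataclass
class SessionLog:
    date: str            # e.g. "2025-01-06"
    task_type: str       # new feature, bug fix, refactoring, boilerplate, tests
    copilot_enabled: bool
    minutes: int
    accepted: int        # suggestions accepted
    rejected: int        # suggestions rejected
    bugs_traced: int     # bugs later traced back to suggestions


def append_log(path: Path, entry: SessionLog) -> None:
    """Append one session to a CSV file, writing a header if it is new."""
    is_new = not path.exists()
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(
            f, fieldnames=[fld.name for fld in fields(SessionLog)]
        )
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(entry))


def acceptance_rate(entries: list[SessionLog]) -> float:
    """Share of suggestions accepted across all sessions (0.0 if none)."""
    accepted = sum(e.accepted for e in entries)
    total = accepted + sum(e.rejected for e in entries)
    return accepted / total if total else 0.0
```

Logging `copilot_enabled` on every row is what makes the comparison week usable later: you can split the same file into Copilot and control sessions without keeping two logs.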

Don't trust my numbers or anyone else's. How much productivity GitHub Copilot actually adds for you depends on how you code, what you code, and how carefully you validate its suggestions.

After thirty years in this industry, I've learned one thing about tools: they're only as good as the judgment of the person using them. Copilot is no different.

Have questions about my methodology or want to compare notes? I'm always happy to talk shop with fellow engineers who care about measuring what actually works.
